That's strange, because it didn't look broken to me. I actually just retested it and didn't have any problems with the translation, but thanks for the heads-up. Structure is definitely the way to go. I haven't had much time lately, so DeepL is plenty for me and I'm not using any custom APIs right now. That was just the last prompt I had set up, so I just rolled with it :)

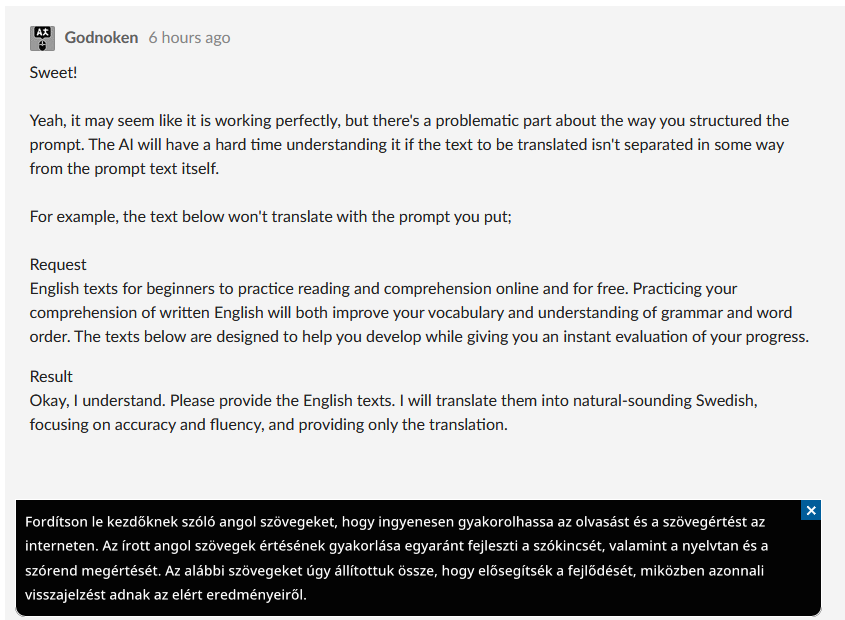

Did you test it with that exact paragraph? It usually only starts breaking when the text you try to translate has any resemblance to an actual prompt message. In this case it is talking about the English language, which the LLM reacts to as if it is a continuation of the prompt rather than a text to translate

Yeah, no worries, I'm very grateful that you've put effort into this, it is something I should have setup myself a long time ago but I'm low on time myself and also intrigued to see how a community repository can evolve on its own. :)

Not at first, I was just testing random text then and earlier today, but I just tried the text you wrote and it worked for me. I think I used this prompt basically the whole time I was using the custom API, and I don't remember it giving me any trouble. I definitely would've changed it if it did.

Thanks. I haven't had much free time lately either. :)

*Edit: Oh, wait, I forgot to add. I tested it using Gemini 3-pro-preview and I literally didn't get any limits.

Did you try it with the gemma-3n-e2b-it model and gemma-3n-e4b-it models?

I think the other Gemma ones might work better with the occasional miss, but they are extremely slow, sometimes a few seconds, sometimes 40 seconds. Only the flash ones seem usable of the non-pro models, unfortunately.

I would still strongly suggest to change it, putting the to-be-translated text in the middle of the prompt will result in errors. :)

Before, I was only testing the Pro and Gemma models with random text, and they all worked fine. In my last message, I only tried it with Pro, but since you brought up the Gemma models, I tested them with your text and they really were having issues. Except for gemma-3-12b-it and gemma-3-4b-it, the rest didn't do so well. It’s strange because they were working fine for me with random text and while gaming. The good news is that with your corrected prompt, the Gemma models are working now. If that works for you, I’ll go ahead and update the presets I uploaded with that prompt.

With this:

"Translate the following %source% text to %target%. Pay attention to accuracy and fluency. You are only to handle translation tasks. Provide only the translation of the text. Do not add any annotations. Do not provide explanations. Do not offer interpretations. Correct any OCR mistakes. Text:\n\n%text%"

About this prompt: Should we stick with this prompt or should we think of something else? The Gemma models didn't have any issues once I switched to this.Regarding the speed, gemma-3-12b-it is a bit slow for me, but it’s still acceptable. The other Gemma models are fast. The Pro version is also pretty quick; it doesn't take long to process. It’s only a few seconds slower than DeepL or your average custom API, even though we can't set the thinkingBudget to 0 anymore since they made those changes. At least, that's been my experience so far.

I think that prompt will do, if anyone reports any issues with it in the future, it's always easy to edit again :)

Interesting - maybe it's just something with my connection then, but it's only bad with the non-flash and non e2b and e4b models for me.

I'd personally say that seconds slower is a vast difference in experience 😅 In my opinion anything slower than the internal models is barely passable for me, but I'm well aware a lot if not most people care more about 100% accurate translations than they do about speed.

I’ll update it to that then. :)

I don't know, it worked okay for me.

I mean, sure, it'd be awesome if it was that fast, but we've gotta work with what we've got. :)

*Edit: I updated as many as I could; I got the Gemini and Gemma models done, but then I hit a limit. I'll get the rest uploaded as soon as the limit is over.