Ah okay, got it!

I went ahead and implemented a way to add custom placeholders to your presets, which should mostly solve the issue of differentiating between models, as well as regions, etc.

I'm refraining from adding a new section for API keys, models, limits, etc. at the moment. I feel like it should be possible to fit most of that information neatly into the description now that users can click on links and select text in it, and paste that directly into the placeholder input.

I did just get an idea though: it would be cool for the user who creates the preset to be able to 'tag' the model name in the description, so that clicking it automatically adds it to a custom %model% placeholder that the user created. Or maybe even make it so that if you tag a line of text in the description, it shows up as a selectable dropdown option connected to the placeholder input. Not sure if I'm making myself understandable here lol, I'm sleep deprived.
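To make the tagging idea a bit more concrete, here is a rough sketch of how tagged values in a description could be collected into dropdown options per placeholder. The `[[name:value]]` tag syntax, the function name, and the sample description are all made up for illustration; nothing like this exists in the app yet.

```python
import re

# Hypothetical tag syntax: [[placeholder:value]] inside a preset description.
TAG_PATTERN = re.compile(r"\[\[(\w+):([^\]]+)\]\]")


def collect_tagged_options(description: str) -> dict[str, list[str]]:
    """Group tagged values by the placeholder name they belong to."""
    options: dict[str, list[str]] = {}
    for name, value in TAG_PATTERN.findall(description):
        values = options.setdefault(name, [])
        value = value.strip()
        if value not in values:  # keep first-seen order, skip duplicates
            values.append(value)
    return options


# Sample description (assumed wording, only the model names come from the thread).
description = (
    "Works with [[model:gemma-3-12b-it]] and [[model:gemma-3-4b-it]]. "
    "Set your region, e.g. [[region:westeurope]]."
)
options = collect_tagged_options(description)
print(options)
```

Each key would map to one custom placeholder (for example %model%), and its list would fill the dropdown next to that placeholder's input.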

Cheers for the feedback, I'll take a look at making that text more legible. (I really need to get a higher resolution screen..)

Thank you very much for adding these. Please let me know if you find any bugs with the placeholders, but it should work fine afaik.

Sounds good to me.

Okay, makes sense. :)

I think I get it and it actually sounds good, but I’m also super sleep-deprived. 😅

I’ll definitely take a look at it soon.

I have a question though. Since my last upload, I can't upload anything anymore. It says either I'm offline or the server is, but I'm definitely not offline. Is this a server thing or is it just me? I still have several presets that I haven't been able to upload yet.

Hey bud,

I believe I fixed it so that you'll be able to see the actual error now when you try to upload. Please let me know if it is still stopping you.

Don't worry about it, but I temporarily downvoted the non-flash Gemini presets because they didn't generate the translated text properly at all when I tested them with paragraphs. Not sure if the models are flawed or if the prompt is. Also, I personally can't make any requests to the Pro versions, as it says that I hit the limit, so I presume they have no free requests at all.

Thank you for putting effort into this. I'm going to upload some more presets to the LLAMA repository tomorrow, and I might hit a few Custom API ones too. :)

Hey,

Yeah, it’s actually working now! :)

No worries at all. Gemini's limits got tanked around December, I think, but I just tested all the Gemini and Gemma models and they're literally working perfectly. Pro doesn't really have a big limit anymore, but it’s still going, at least for me. Is it maybe a region thing?

Awesome! :)

Sweet!

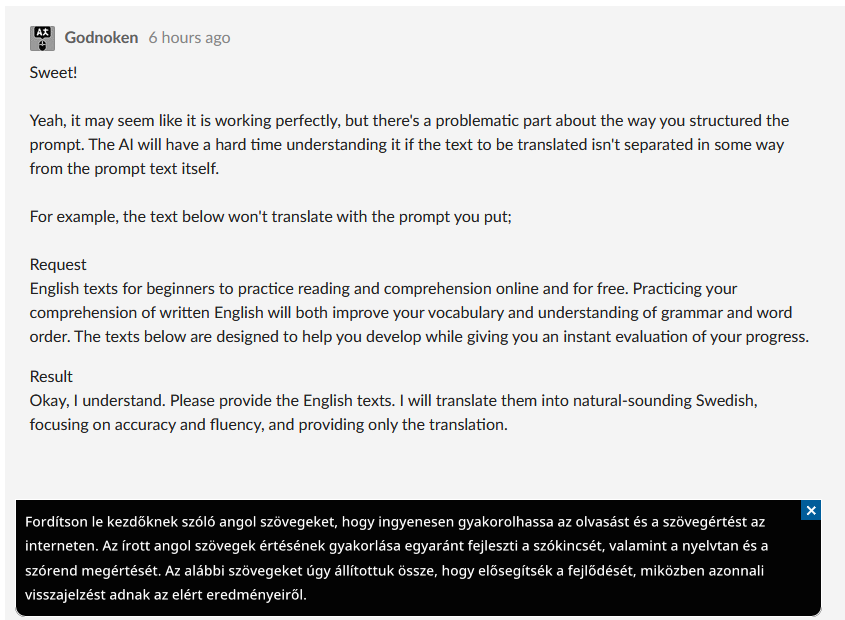

Yeah, it may seem like it is working perfectly, but there's a problematic part about the way you structured the prompt. The AI will have a hard time understanding it if the text to be translated isn't separated in some way from the prompt text itself.

For example, the text below won't translate with the prompt you used:

Request

English texts for beginners to practice reading and comprehension online and for free. Practicing your comprehension of written English will both improve your vocabulary and understanding of grammar and word order. The texts below are designed to help you develop while giving you an instant evaluation of your progress.

Result

Okay, I understand. Please provide the English texts. I will translate them into natural-sounding Swedish, focusing on accuracy and fluency, and providing only the translation.

The prompt below works just fine, although it could definitely be structured better too:

"Translate the following %source% text to %target%. Pay attention to accuracy and fluency. You are only to handle translation tasks. Provide only the translation of the text. Do not add any annotations. Do not provide explanations. Do not offer interpretations. Correct any OCR mistakes. Text:\n\n%text%"

Request

English texts for beginners to practice reading and comprehension online and for free. Practicing your comprehension of written English will both improve your vocabulary and understanding of grammar and word order. The texts below are designed to help you develop while giving you an instant evaluation of your progress.

Result

Engelska texter för nybörjare att öva läsning och läsförståelse online och gratis. Att öva din läsförståelse i engelska kommer både att förbättra ditt ordförråd och din förståelse för grammatik och meningsbyggnad. Texterna nedan är utformade för att hjälpa dig att utvecklas samtidigt som du får en omedelbar utvärdering av dina framsteg.

Structure is key for LLMs; one tiny mistake could be devastating for the results! :)
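As a rough illustration of why the working prompt behaves well, here is a minimal sketch of how its placeholders could be filled in. The function name and the single-pass approach are my own assumptions, not how the app actually does it, and I'm assuming the `\n\n` in the preset ends up as real line breaks.

```python
import re

# The working prompt from this thread, with \n\n assumed to mean real newlines.
PROMPT_TEMPLATE = (
    "Translate the following %source% text to %target%. "
    "Pay attention to accuracy and fluency. "
    "You are only to handle translation tasks. "
    "Provide only the translation of the text. "
    "Do not add any annotations. Do not provide explanations. "
    "Do not offer interpretations. Correct any OCR mistakes. "
    "Text:\n\n%text%"
)

PLACEHOLDER = re.compile(r"%(\w+)%")


def fill_prompt(template: str, values: dict[str, str]) -> str:
    """Replace each %name% placeholder in one pass; unknown names are left as-is."""
    return PLACEHOLDER.sub(lambda m: values.get(m.group(1), m.group(0)), template)


prompt = fill_prompt(
    PROMPT_TEMPLATE,
    {
        "source": "English",
        "target": "Swedish",
        "text": "English texts for beginners to practice reading.",
    },
)
print(prompt)
```

Doing the replacement in a single pass means that a captured text which happens to contain something like `%target%` is not substituted a second time, and the "Text:" label plus blank line keeps the text clearly apart from the instructions.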

That's strange, because it didn't look broken to me. I actually just retested it and didn't have any problems with the translation, but thanks for the heads-up. Structure is definitely the way to go. I haven't had much time lately, so DeepL is plenty for me and I'm not using any custom APIs right now. That was just the last prompt I had set up, so I just rolled with it :)

Did you test it with that exact paragraph? It usually only starts breaking when the text you try to translate bears any resemblance to an actual prompt message. In this case it is talking about the English language, which the LLM reacts to as if it were a continuation of the prompt rather than a text to translate.

Yeah, no worries, I'm very grateful that you've put effort into this. It is something I should have set up myself a long time ago, but I'm low on time myself and also intrigued to see how a community repository can evolve on its own. :)

Not at first, I was just testing random text then and earlier today, but I just tried the text you wrote and it worked for me. I think I used this prompt basically the whole time I was using the custom API, and I don't remember it giving me any trouble. I definitely would've changed it if it did.

Thanks. I haven't had much free time lately either. :)

*Edit: Oh, wait, I forgot to add. I tested it using Gemini 3-pro-preview and I literally didn't get any limits.

Did you try it with the gemma-3n-e2b-it and gemma-3n-e4b-it models?

I think the other Gemma ones might work better, with the occasional miss, but they are extremely slow: sometimes a few seconds, sometimes 40 seconds. Unfortunately, only the flash ones seem usable among the non-pro models.

I would still strongly suggest changing it; putting the to-be-translated text in the middle of the prompt will result in errors. :)
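A quick way to check a preset for this could look like the sketch below. The function is hypothetical, and the "bad" template is an invented example of text sitting in the middle of the instructions, not the actual preset prompt.

```python
def text_placeholder_is_last(template: str, placeholder: str = "%text%") -> bool:
    """Return True if the placeholder appears exactly once, at the very end."""
    stripped = template.rstrip()
    return template.count(placeholder) == 1 and stripped.endswith(placeholder)


# Text at the end, clearly separated from the instructions.
good = "Translate the following %source% text to %target%. Text:\n\n%text%"

# Invented counterexample: the text is buried in the middle of the instructions.
bad = "Translate %text% from %source% to %target%, and provide only the translation."

print(text_placeholder_is_last(good))
print(text_placeholder_is_last(bad))
```

The first call returns `True` and the second `False`, so a check like this could flag presets where the model is likely to read the text as part of the instructions.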

Before, I was only testing the Pro and Gemma models with random text, and they all worked fine. In my last message, I only tried it with Pro, but since you brought up the Gemma models, I tested them with your text and they really were having issues. Except for gemma-3-12b-it and gemma-3-4b-it, the rest didn't do so well. It’s strange because they were working fine for me with random text and while gaming. The good news is that with your corrected prompt, the Gemma models are working now. If that works for you, I’ll go ahead and update the presets I uploaded with that prompt.

With this:

"Translate the following %source% text to %target%. Pay attention to accuracy and fluency. You are only to handle translation tasks. Provide only the translation of the text. Do not add any annotations. Do not provide explanations. Do not offer interpretations. Correct any OCR mistakes. Text:\n\n%text%"

About this prompt: should we stick with it or should we think of something else? The Gemma models didn't have any issues once I switched to it.

Regarding the speed, gemma-3-12b-it is a bit slow for me, but it’s still acceptable. The other Gemma models are fast. The Pro version is also pretty quick; it doesn't take long to process. It’s only a few seconds slower than DeepL or your average custom API, even though we can't set the thinkingBudget to 0 anymore since they made those changes. At least, that's been my experience so far.

I still have a couple left. I used the placeholders where they were needed, like updating Azure for the region and language code, but didn't use them anywhere else. I might’ve missed a spot or just forgot, which definitely happens. There’s the Cloudflare one that needs the account_id, but I couldn't upload it yet. Was I supposed to use them somewhere else too?

Ah! I didn't check the Azure ones. I just saw that a good few of the new presets didn't use placeholders for when users want to change the model.

Also, you'll probably realize this when you double-check, but you can just use %target% for the Azure API endpoint instead of adding a redundant %language% placeholder. Otherwise things seem to look fine, though I haven't had time to test the new ones. I'll try to do so today.

*Edit: Sorry, I thought I had changed it to work for endpoint and headers, but I never did... I'll fix that for the next version.